
At IBS Precision Engineering we specialize in precision engineering, harnessing the power of metrology to achieve unparalleled accuracy in our work. This FAQ page is designed to provide you with answers to some of the most common questions about metrology in precision engineering.

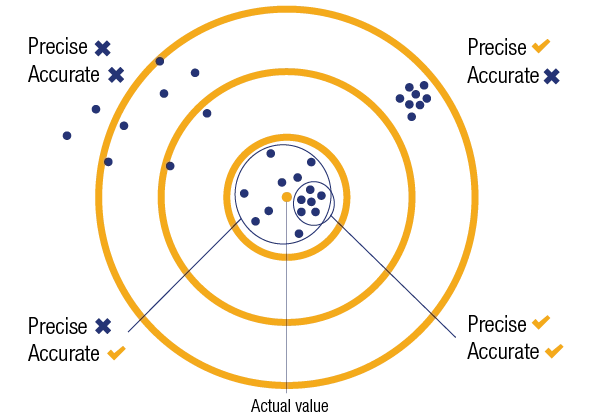

The accuracy of a measurement is a qualitative indication of how closely the result of a measurement agrees with the true value of the parameter being measured. Because the true value is always unknown, the accuracy of a measurement is always an estimate. An accuracy statement by itself has no meaning other than as an indicator of quality; it has quantitative value only when accompanied by information about the uncertainty of the measuring system. The accuracy of a measuring instrument is a qualitative indication of its ability to give responses close to the true value of the parameter being measured. Accuracy is a design specification and may be verified during calibration. The accuracy of most instrumentation depends on the accuracy of the device or method used to calibrate it. Accuracy can also change over time, so instruments and tools must be recalibrated at appropriate intervals to maintain their specified accuracy.

Precision is a property of a measuring system or instrument. It is a measure of the repeatability of a measuring system: how closely a group of repeated measurements of the same quantity, made under the same conditions, agree with one another. It refers to the closeness of agreement between measurement results and indicates how consistent a process is. The better the precision, the smaller the differences among the values, showing that the results are highly repeatable. High precision can only be achieved with high-quality instruments and careful work. Precision is usually expressed in terms of the deviation of a set of results from the arithmetic mean of the set, typically as a standard deviation.
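For reference, the usual sample standard deviation s of n repeated readings x_1, ..., x_n about their arithmetic mean is shown below. The n - 1 divisor is the standard (Bessel-corrected) choice when the readings are a sample rather than the whole population. A smaller s indicates better precision.

```latex
\bar{x} = \frac{1}{n}\sum_{i=1}^{n} x_i,
\qquad
s = \sqrt{\frac{1}{n-1}\sum_{i=1}^{n}\left(x_i - \bar{x}\right)^{2}}
```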

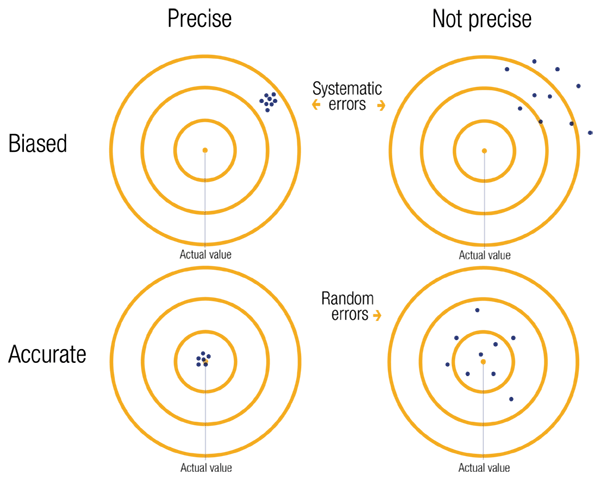

Precision and accuracy are independent of each other: a measurement can be very precise but not accurate, or vice versa. The term precision is also used as a synonym for the resolution of a measurement. For example, a measurement that can distinguish between 0.01 and 0.02 is more precise (has a higher resolution) than one that can only tell the difference between 0.1 and 0.2, even though the two may be equally accurate or inaccurate.
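To illustrate that the two properties are independent, the sketch below summarizes two hypothetical instruments measuring the same reference. The offset of the mean from the reference (bias) indicates accuracy. The sample standard deviation indicates precision. The readings, the reference value and the `summarize` helper are invented for illustration.

```python
from statistics import mean, stdev

# Hypothetical reference value of a gauge block, in mm.
REFERENCE_MM = 10.000

# Hypothetical repeated readings of that gauge block, in mm.
instrument_a = [10.052, 10.051, 10.053, 10.052, 10.051]  # tightly grouped, but offset
instrument_b = [9.990, 10.012, 9.995, 10.008, 9.995]     # centred, but scattered


def summarize(readings, reference):
    """Return (bias, spread): bias relates to accuracy, spread to precision."""
    bias = mean(readings) - reference  # offset of the average from the reference
    spread = stdev(readings)           # sample standard deviation (n - 1 divisor)
    return bias, spread


for name, readings in (("A", instrument_a), ("B", instrument_b)):
    bias, spread = summarize(readings, REFERENCE_MM)
    print(f"Instrument {name}: bias = {bias:+.4f} mm, std dev = {spread:.4f} mm")
```

With these numbers, instrument A is precise but not accurate. Instrument B is accurate on average but less precise.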

Repeatability and reproducibility are two aspects of precision.

Repeatability describes the minimum variability of precision: the variation that occurs when conditions are constant and the same operator uses the same instrument, in the same location, within a short period of time. Repeatability is expressed with the following statistical quantities: the mean, the standard deviation, or the standard deviation of the mean. The smaller the standard deviation, the higher the repeatability and the more reliable the results.
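A minimal sketch of these repeatability statistics for a set of hypothetical readings follows. The standard deviation of the mean, s / sqrt(n), assumes the readings are independent. It describes how much the average of n readings would scatter, which is less than the scatter of a single reading. The function name and data are illustrative only.

```python
import math
from statistics import mean, stdev


def repeatability_stats(readings):
    """Return (mean, sample standard deviation, standard deviation of the mean)."""
    n = len(readings)
    if n < 2:
        raise ValueError("need at least two readings to estimate dispersion")
    x_bar = mean(readings)
    s = stdev(readings)          # scatter of individual readings (n - 1 divisor)
    s_mean = s / math.sqrt(n)    # scatter of the mean of n independent readings
    return x_bar, s, s_mean


# Hypothetical: ten readings of the same feature under repeatability conditions (um).
readings_um = [25.3, 25.1, 25.2, 25.4, 25.2, 25.3, 25.1, 25.2, 25.3, 25.2]
x_bar, s, s_mean = repeatability_stats(readings_um)
print(f"mean = {x_bar:.3f} um, s = {s:.3f} um, s/sqrt(n) = {s_mean:.3f} um")
```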

Reproducibility is an important part of estimating measurement uncertainty. It describes the maximum variability of precision, where variations occur over longer time periods with different instruments, different locations, or different operators. It measures the ability to replicate the findings of others. In other words, a reproducibility condition of measurement is a repeatability test in which one or more measurement conditions have been changed, in order to evaluate the impact of that change on the measurement results.
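Below is a rough sketch of how repeatability and reproducibility can be estimated from the same data set. It assumes a balanced experiment: the same number of repeat readings under each changed condition, here one group per operator. The pooled within-operator scatter estimates the repeatability standard deviation s_r. Adding the between-operator component gives the reproducibility standard deviation s_R. This is a simplified version of the one-way analysis-of-variance approach used in ISO 5725-2. The data and the function name are hypothetical.

```python
from statistics import mean, variance


def repeatability_reproducibility(groups):
    """Estimate (s_r, s_R) from a balanced design.

    groups: one list of repeat readings per condition (e.g. per operator),
    every list having the same length n.
    """
    n = len(groups[0]) if groups else 0
    if len(groups) < 2 or n < 2 or any(len(g) != n for g in groups):
        raise ValueError("need >= 2 groups, each with the same n >= 2 readings")
    # Repeatability variance: average of the within-group variances.
    s_r2 = mean(variance(g) for g in groups)
    # Observed variance of the group means.
    s_d2 = variance([mean(g) for g in groups])
    # Between-condition variance; clipped at zero when the estimate is negative.
    s_L2 = max(0.0, s_d2 - s_r2 / n)
    # Reproducibility variance = repeatability + between-condition variance.
    s_R2 = s_r2 + s_L2
    return s_r2 ** 0.5, s_R2 ** 0.5


# Hypothetical readings (mm) of one part by three operators, four repeats each.
operators = [
    [10.02, 10.03, 10.01, 10.02],
    [10.05, 10.06, 10.05, 10.04],
    [10.00, 10.01, 10.02, 10.01],
]
s_r, s_R = repeatability_reproducibility(operators)
print(f"repeatability s_r = {s_r:.4f} mm, reproducibility s_R = {s_R:.4f} mm")
```

Because s_R includes the between-operator effect, it can never be smaller than s_r. This matches the description of reproducibility as the maximum variability of precision.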

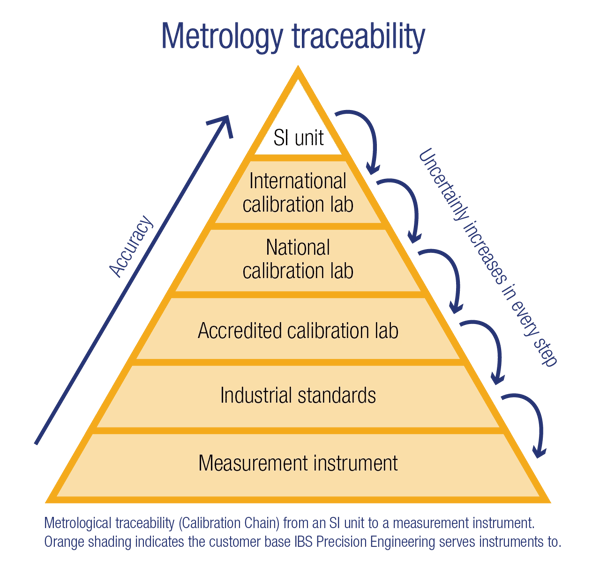

Metrological traceability is a property of a measurement result whereby the result can be related to a reference through a documented, unbroken chain of calibrations, each contributing to the measurement uncertainty. Put more simply, traceability is a series of comparisons. The instrument's measurement result (measured value and uncertainty) is compared with a higher-accuracy standard. That standard is in turn linked to a still more accurate standard, and so on until the chain reaches international standards or the SI.

The metrological traceability chain is 'the sequence of measurement standards and calibrations that are used to relate a measurement result to a reference'. It links every reference standard used, in order from highest to lowest accuracy, down to the final link. If any link in the chain is broken, traceability is lost from that point downwards, and the result can no longer be traced back to the top of the chain.
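The chain idea can be modelled as a list of calibration steps, checked from the top down. This is a minimal sketch built on several assumptions. The link names and uncertainty values are hypothetical. Each step's contribution is treated as uncorrelated and combined by root-sum-square. A link counts as broken when it is not calibrated against the level directly above it. Real chains are evaluated with a full uncertainty analysis for each calibration.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Link:
    """One calibration step in a hypothetical traceability chain."""
    name: str
    calibrated_against: Optional[str]  # higher-level standard; None at the top
    u: float  # standard uncertainty contributed by this step (same unit throughout)


def chain_uncertainty(chain: List[Link]) -> List[Tuple[str, float]]:
    """Walk the chain from the top down, accumulating uncertainty.

    Contributions are combined in quadrature (root-sum-square), which assumes
    they are uncorrelated. A link that is not calibrated against the level
    directly above it breaks traceability, so a ValueError is raised.
    """
    accumulated = []
    total_sq = 0.0
    for i, link in enumerate(chain):
        expected = chain[i - 1].name if i > 0 else None
        if link.calibrated_against != expected:
            raise ValueError(f"traceability broken at '{link.name}'")
        total_sq += link.u ** 2
        accumulated.append((link.name, math.sqrt(total_sq)))
    return accumulated


# Hypothetical chain for a length measurement, uncertainties in micrometres.
chain = [
    Link("national length standard", None, 0.02),
    Link("reference gauge blocks", "national length standard", 0.05),
    Link("working gauge blocks", "reference gauge blocks", 0.10),
    Link("workshop micrometer", "working gauge blocks", 0.50),
]

for name, u in chain_uncertainty(chain):
    print(f"{name:28s} u = {u:.3f} um")
```

The accumulated uncertainty only grows as the chain descends. This mirrors the pyramid picture: standards nearer the top can provide smaller uncertainties.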

The traceability pyramid presents the hierarchy of reference standards and the size (magnitude) of the uncertainty each can provide. The higher a reference standard sits in the pyramid, the smaller the measurement uncertainty it can provide and, therefore, the more accurate the standard. Note: traceability refers to the “result of measurement”, not to the instrument, the standard, or even the calibration performed.

Calibration is the process of checking, and where necessary adjusting, an instrument or measurement system to ensure that it provides accurate and reliable results. Calibration is typically done by comparing the readings of the instrument or system with known, traceable standards and making adjustments as necessary to bring the readings into agreement with the standards. The purpose of calibration is to ensure that the instrument or system performs within its specified accuracy and to minimize measurement uncertainty. Calibration is performed with the item in its normal operating configuration, that is, as the normal operator would use it.
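One way to act on the comparison described above is to derive a correction from it and apply that correction to later readings. This is a minimal sketch assuming a linear (gain and offset) error model fitted by ordinary least squares. The reference values, the indications and both helper functions are hypothetical. A real calibration procedure defines its own model, number of points and acceptance criteria.

```python
def fit_linear_correction(indicated, reference):
    """Least-squares fit of reference = gain * indicated + offset."""
    n = len(indicated)
    if n != len(reference) or n < 2:
        raise ValueError("need at least two paired points of equal length")
    mean_x = sum(indicated) / n
    mean_y = sum(reference) / n
    sxx = sum((x - mean_x) ** 2 for x in indicated)
    if sxx == 0:
        raise ValueError("indicated values must not all be equal")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(indicated, reference))
    gain = sxy / sxx
    offset = mean_y - gain * mean_x
    return gain, offset


def corrected(reading, gain, offset):
    """Apply the fitted correction to a raw instrument reading."""
    return gain * reading + offset


# Hypothetical: reference gauge-block values and the instrument's indications, in mm.
reference_mm = [0.0, 25.0, 50.0, 75.0, 100.0]
indicated_mm = [0.005, 25.030, 50.055, 75.080, 100.105]

gain, offset = fit_linear_correction(indicated_mm, reference_mm)
print(f"gain = {gain:.6f}, offset = {offset:+.6f} mm")
print(f"raw 60.000 mm -> corrected {corrected(60.0, gain, offset):.4f} mm")
```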

The result of a calibration is a determination of the performance quality of the instrument with respect to the desired specifications. This may be in the form of a pass/fail decision, determining or assigning one or more values, or the determination of one or more corrections. Calibration is performed according to a specified documented calibration procedure, under a set of specified and controlled measurement conditions, and with a specified and controlled measurement system.
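A pass/fail result can be sketched as a comparison of each error against a tolerance. The decision rule here is a hypothetical example: every error |indication - reference| must stay within the tolerance minus an optional guard band. In practice, the documented procedure specifies the decision rule. That rule usually accounts for the uncertainty of the calibration itself, for example by using guard bands. All names and values below are illustrative.

```python
def calibration_decision(indicated, reference, tolerance, guard_band=0.0):
    """Pass/fail check of indications against reference values.

    Hypothetical decision rule: every |indicated - reference| must be within
    (tolerance - guard_band). A guard band > 0 tightens the acceptance limit
    to leave room for the calibration's own measurement uncertainty.
    """
    if len(indicated) != len(reference) or not indicated:
        raise ValueError("need equally long, non-empty lists")
    if not 0.0 <= guard_band < tolerance:
        raise ValueError("guard band must be >= 0 and smaller than the tolerance")
    errors = [ind - ref for ind, ref in zip(indicated, reference)]
    worst = max(abs(e) for e in errors)
    return worst <= tolerance - guard_band, worst


# Hypothetical calibration points, in mm.
reference_mm = [0.0, 25.0, 50.0, 75.0, 100.0]
indicated_mm = [0.001, 25.002, 49.999, 75.003, 100.002]

passed, worst = calibration_decision(indicated_mm, reference_mm, tolerance=0.004)
print(f"worst error = {worst:.3f} mm -> {'PASS' if passed else 'FAIL'}")
```

The same data can pass without a guard band and fail with one. For this reason, the decision rule belongs in the documented calibration procedure.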

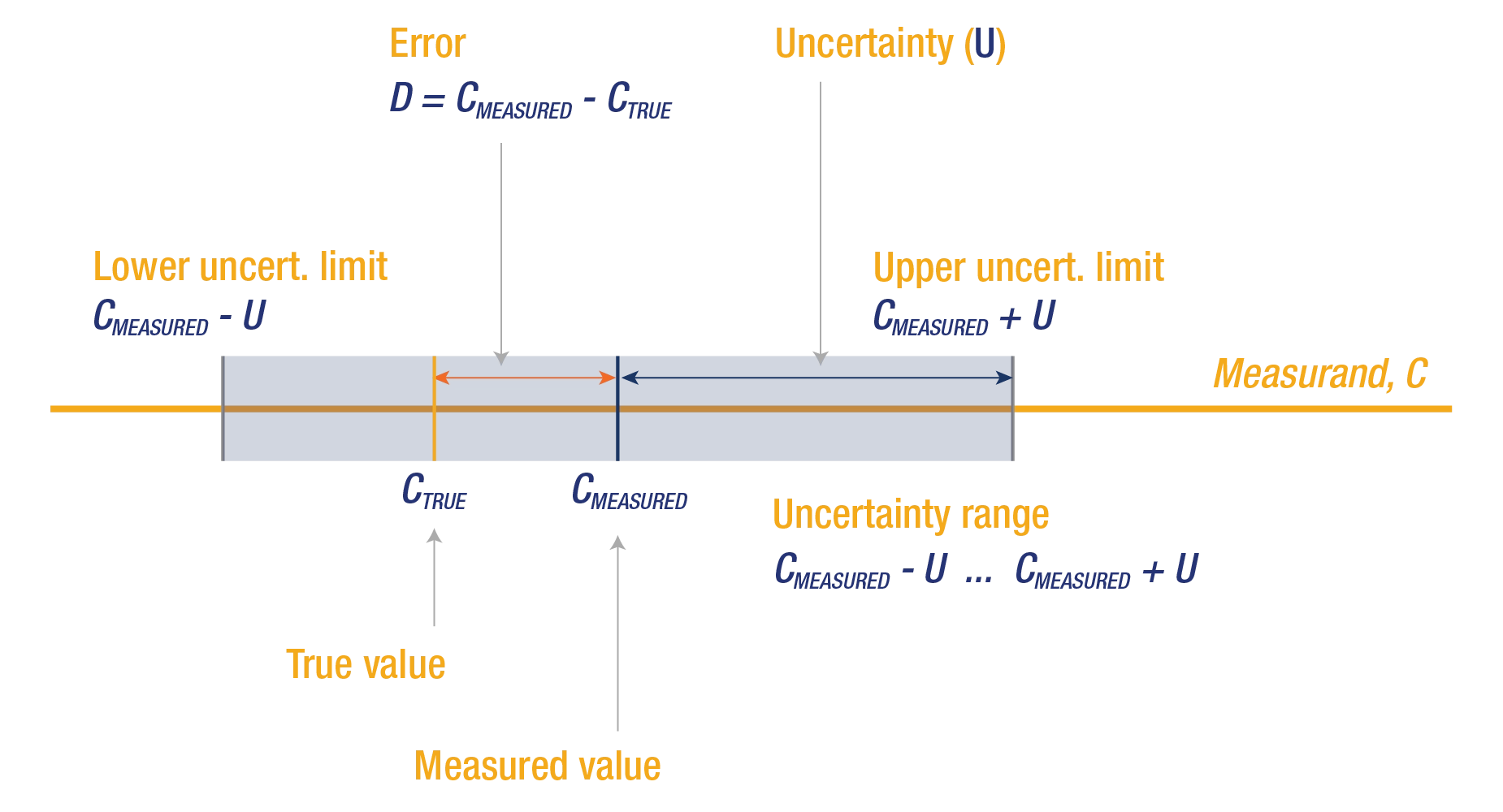

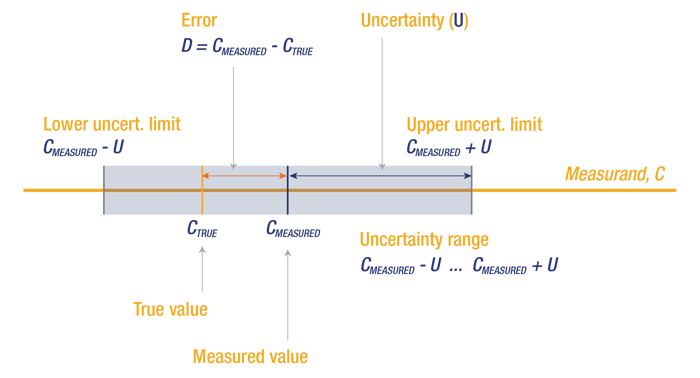

In metrology, error (or measurement error) is an estimate of the difference between the measured value and the probable true value of the object of the measurement. The error can never be known exactly; it is always an estimate. Error may be systematic and/or random.

A systematic error is the mean of a large number of measurements of the same value minus the (probable) true value of the measured parameter. Systematic error causes the average of the readings to be offset from the true value. It is a measure of that offset, both its magnitude and its sign, and once estimated it can be corrected for. Systematic error is also called bias when it applies to a measuring instrument.

Systematic errors

Random error is the result of a single measurement of a value, minus the mean of a large number of measurements of the same value. Random error causes scatter in the results of a sequence of readings and, therefore, is a measure of dispersion.
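Both definitions above use the same mean, so the total error of each reading splits exactly into a systematic part and a random part. The sketch below makes a few stated assumptions. The readings are hypothetical. The value of a calibrated reference standard stands in for the unknowable true value. Four readings stand in for the "large number of measurements" that the definitions call for.

```python
from statistics import mean


def decompose_errors(readings, reference_value):
    """Split each reading's error into a systematic and a random part.

    systematic = mean(readings) - reference_value   (same for every reading)
    random_i   = reading_i - mean(readings)
    so that reading_i - reference_value == systematic + random_i.
    """
    x_bar = mean(readings)
    systematic = x_bar - reference_value
    random_parts = [x - x_bar for x in readings]
    return systematic, random_parts


# Hypothetical: repeated readings of a 5.000 mm reference standard.
readings_mm = [5.013, 5.011, 5.014, 5.012]
systematic, random_parts = decompose_errors(readings_mm, 5.000)

# Correcting for the estimated systematic error (bias) removes the offset,
# but the random scatter between readings remains.
corrected_mm = [x - systematic for x in readings_mm]
print(f"systematic error = {systematic:+.4f} mm")
print("random errors =", [f"{r:+.4f}" for r in random_parts])
```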

Random errors:

Measurement uncertainty is the non-negative parameter characterizing the dispersion of the quantity values being attributed to a measurand, based on the information used. In simpler terms, measurement uncertainty is an estimate of the range of values within which the true value of a measured quantity is likely to fall. Measurement uncertainty and accuracy are closely related but distinct concepts. Accuracy refers to how close a measured value is to the true value. Measurement uncertainty is an estimate of how much the measured value might differ from the true value due to factors such as random errors, systematic errors, and limitations of the measurement method or instrument. A measurement can be accurate (close to the true value) yet have a high uncertainty if the range of plausible values is large. Conversely, a measurement can have a low uncertainty, because the range of plausible values is small, yet be less accurate, for example when an unrecognized systematic error shifts it away from the true value.

To determine measurement uncertainty, several factors must be considered.

Guidelines such as the "Guide to the Expression of Uncertainty in Measurement" (GUM) provide a framework for estimating and reporting measurement uncertainty.
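The following is a minimal sketch of a GUM-style uncertainty budget. It assumes a direct measurement, with all sensitivity coefficients equal to 1, uncorrelated input quantities, and a coverage factor k = 2. The Type A component is evaluated statistically from repeated readings. The Type B components come from other information, here the display resolution and a calibration certificate. All components are combined in quadrature. The readings, budget entries and helper names are hypothetical.

```python
import math
from statistics import stdev


def type_a_of_mean(readings):
    """Type A: standard uncertainty of the mean of n repeated readings."""
    return stdev(readings) / math.sqrt(len(readings))


def type_b_rectangular(half_width):
    """Type B: standard uncertainty of a rectangular distribution of half-width a."""
    return half_width / math.sqrt(3)


def combined_standard_uncertainty(contributions):
    """Root-sum-square of uncorrelated standard uncertainties (sensitivity 1)."""
    return math.sqrt(sum(u ** 2 for u in contributions))


# Hypothetical uncertainty budget for a direct length measurement, in micrometres.
readings_um = [12.41, 12.43, 12.40, 12.42, 12.44, 12.42]
budget = {
    "repeatability (Type A)": type_a_of_mean(readings_um),
    "display resolution 0.01 um (Type B)": type_b_rectangular(0.01 / 2),
    "reference standard, U = 0.02 um at k = 2 (Type B)": 0.02 / 2,
}

u_c = combined_standard_uncertainty(budget.values())
k = 2  # coverage factor; about 95 % coverage if the result is close to normal
U = k * u_c
print(f"result = {sum(readings_um) / len(readings_um):.3f} um +/- {U:.3f} um (k = {k})")
```

The result is reported as the measured value together with an expanded uncertainty U. This gives the "range of values within which the true value is likely to fall" that the definition above describes.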

When selecting the right measurement instrument for a specific task, several key considerations should be taken into account:

Future Expansion and Upgrades: Anticipate future needs and potential growth. Determine if the instrument allows for expansion or upgrades, such as additional modules, accessories, or software capabilities. This flexibility can be advantageous as your measurement requirements evolve over time.

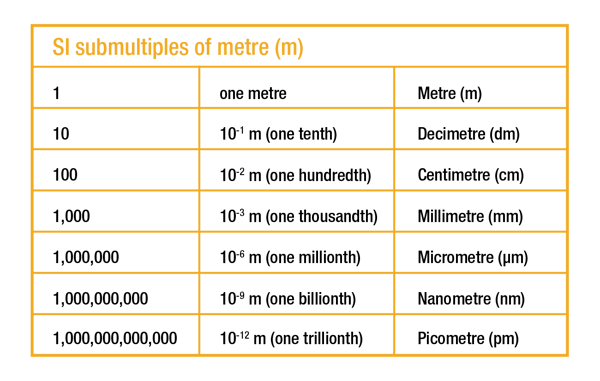

A picometre is a unit of length in the International System of Units (SI). It is equal to one trillionth (1/1,000,000,000,000) of a metre, or 10^(-12) metres. The symbol for the picometre is 'pm'. It is incredibly small and is typically used to measure the size of atoms, molecules, and their interactions.
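SI prefixes are exact powers of ten, so conversions to and from picometres can be done with no rounding error. This small sketch uses Python's `fractions.Fraction`. The unit table is an illustrative subset, 'um' is an ASCII stand-in for µm, and `convert_length` is a hypothetical helper.

```python
from fractions import Fraction

# Illustrative subset of SI length units, as powers of ten relative to the metre.
SI_LENGTH_EXPONENTS = {"m": 0, "mm": -3, "um": -6, "nm": -9, "pm": -12}


def convert_length(value, from_unit, to_unit):
    """Convert a length exactly between the units above.

    Pass ints, Fractions or decimal strings such as "0.1" for exact results;
    floats are accepted but carry their binary rounding error.
    """
    exponent = SI_LENGTH_EXPONENTS[from_unit] - SI_LENGTH_EXPONENTS[to_unit]
    return Fraction(value) * Fraction(10) ** exponent


print(convert_length(1, "pm", "m"))       # one picometre expressed in metres
print(convert_length(1, "um", "pm"))      # one micrometre expressed in picometres
print(convert_length("0.1", "nm", "pm"))  # a tenth of a nanometre in picometres
```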

To give an idea of the size of a picometre: